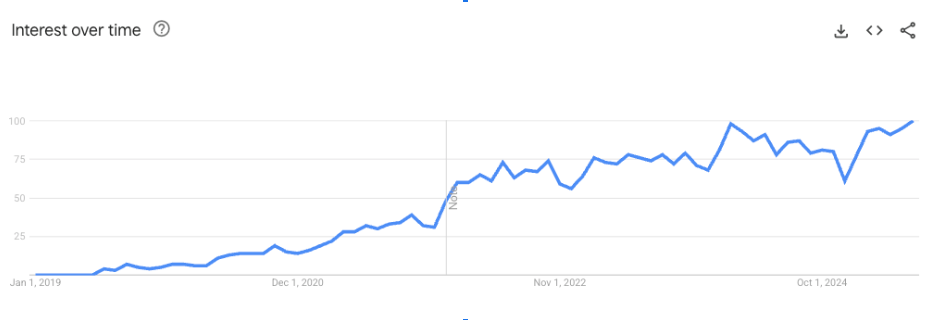

MLOps was nobody's job title in 2019. But over the past few years, the term's search volume has hockey-sticked as people realize that productionizing models is harder than it seems.

Simply put, MLOps teams make machine learning a reality by bridging research and exploration with reliable production systems.

Take a typical scenario: your data scientists build a recommendation model that crushes the baseline in testing—potentially worth $2M annually for a typical e-commerce site. Three months later, customers still see generic bestsellers. By the time it ships, multiple rewrites have broken the core logic. The revenue barely budges.

Ultimately, MLOps is more than just deploying models. It's about getting four very different teams to work in harmony. Each speaks a different language and that's where things tend to break down.

Here's how ML models typically go from idea to production:

- Data Scientists identify what data they need and request pipelines from Data Engineers

- Data Engineers build ETL pipelines to move data from production systems to warehouses

- Data Scientists use that data to create features in Python notebooks and train models

- MLOps Engineers translate the Python features to production languages (Java/Scala/Go) and deploy

Why ML teams get stuck

This process creates multiple friction points:

Features work differently in production: When MLOps engineers rewrite features in another language, small differences in implementation could lead to large drifts in model performance.

Testing changes requires complex infrastructure: Most teams have staging environments, but they rarely match production. Testing a single feature change means redeploying entire pipelines.

Problems span multiple systems: When production accuracy drops, the bug could be in the notebook, the data pipeline, the feature translation, or the deployment config. Each system is owned by a different team.

Sequential handoffs create bottlenecks: Each team blocks the next. Data engineers wait for requirements. Scientists wait for data. MLOps waits for finalized models. Weeks and months pass.

These problems compound with unstructured data. Need to parse receipts for expense categories? Extract sentiment from reviews? Now you need AI Engineers to build LLM pipelines. But AI Engineers who understand both ML and LLMs are rare. Most teams cobble together separate systems that don't talk to each other.

By the time a model reaches production, it's unrecognizable. Multiple rewrites. Multiple languages. Multiple systems. When something breaks, nobody has the full picture.

But what if features written in notebooks just worked in production? What if every team could contribute without rewrites or handoffs?

How Chalk breaks down silos

Chalk eliminates these handoffs by creating a single platform where all teams contribute without translation layers:

The new workflow - everyone contributes to the same platform:

- Data Engineers define data relationships at a higher level of abstraction - Chalk handles the pipeline complexity

- Data Scientists write features in Python that run with ms latency in production

- AI Engineers extract structured features from unstructured data using LLMs and embeddings

- MLOps enables self-service deployments with built-in governance

With Chalk: No rewrites. No handoffs. No disconnected systems.

Here's how each team works with Chalk:

Data Engineers: Declarative pipelines without the plumbing

- Define data relationships that work across training and production

- Configure caching, materialization, and time-windowed aggregations declaratively

- Connect to existing SQL sources (Postgres, Snowflake, Athena) with resolvers

- No manual ETL needed - Chalk handles query execution and optimization

Data Scientists: From notebook to production in minutes

- Write features in familiar Python on any notebook

- Test on branches with historical production data

- Push directly to production with

chalk apply

MLOps Engineers: Deploy and monitor without becoming the bottleneck

- Enable self-service deployments for all teams

- Monitor model performance and feature drift

- Configure gradual rollouts and version control

- Focus on deployment strategies, not infrastructure management

AI Engineers: Turn unstructured data into ML features

- Extract structured features from documents, receipts, reviews using LLMs

- Systematically evaluate which prompts and models extract the best features

- Deploy LLM-extracted features that compute alongside traditional features

- No separate vector DBs, embedding pipelines, or prompt tools to manage

What's next?

The promise of MLOps was always about velocity — shipping ML value faster. Chalk delivers on that promise by aligning teams around a shared platform instead of forcing handoffs between incompatible systems.

This blog post is just the beginning. In upcoming posts, we'll dive deep into how each team can leverage Chalk:

- Chalk for Data Scientists: From notebook to production in minutes

- Chalk for Data Engineers: Spin up data pipelines without the orchestration overhead

- Chalk for AI Engineers: Building production LLM apps that scale

- Chalk for MLOps Engineers: Deploying production-grade infra quickly, often, and reliably