TL;DR

Python is the language of choice for many ML teams — it’s flexible, expressive, and has the right ergonomics for real-time workflows. But it’s also slow.

To make Python faster, I built a Symbolic Python Interpreter that converts Chalk’s “Python resolvers” into optimized Velox-native expressions, enabling high-performance execution without sacrificing developer experience.

This post explains the problem I encountered, why it’s so challenging, and how we solved it.

Background

Machine learning teams want to move fast: ship models, incorporate new data sources, and react to real-time signals. But traditional feature pipelines — including ones written in Python — are slow, brittle, and not built for low-latency environments.

At Chalk, we’re building a real-time feature platform to change that. Chalk helps teams incorporate new data sources and iterate on ML models faster for real-time decision-making. At its core, Chalk lets engineers define and compute features dynamically, so that models always have access to the freshest and most relevant data.

Chalk’s computation engine revolves around resolvers — composable blocks of computation that describe how data is stored or derived from existing data. Resolvers pull data from various sources like SQL databases or compute new values through Python functions, making feature engineering faster, more flexible, and easier.

Python resolvers: flexible, but not always fast

The most flexible type of resolver in Chalk is a Python resolver. With a Python resolver, you can:

- Specify input features.

- Write Python code to compute new features.

- Use any Python library, including Pandas, Polars, NumPy, and even web-scraping tools like BeautifulSoup.

Python is great for writing custom logic, but it comes with performance trade-offs. For example, consider a transaction-processing resolver that recalculates the expected total based on discounts, tax rates, and whether the transaction is taxable:

```python
def compute_total(
    subtotal: Transaction.subtotal,
    discount: Transaction.discount,
    tax_rate: Transaction.locale.sales_tax_rate,
    is_taxable: Transaction.taxable,
) -> Transaction.total:
    if discount is not None:
        # Apply any discount, but don't allow it to become negative.
        subtotal = max(subtotal - discount, 0.0)
    if not is_taxable or tax_rate is None:
        return subtotal
    return subtotal * (1.0 + tax_rate)
```

The flexibility of Python makes it easy to implement custom business logic, but running Python resolvers in real-time ML pipelines introduces performance bottlenecks.

How Chalk executes Python resolvers

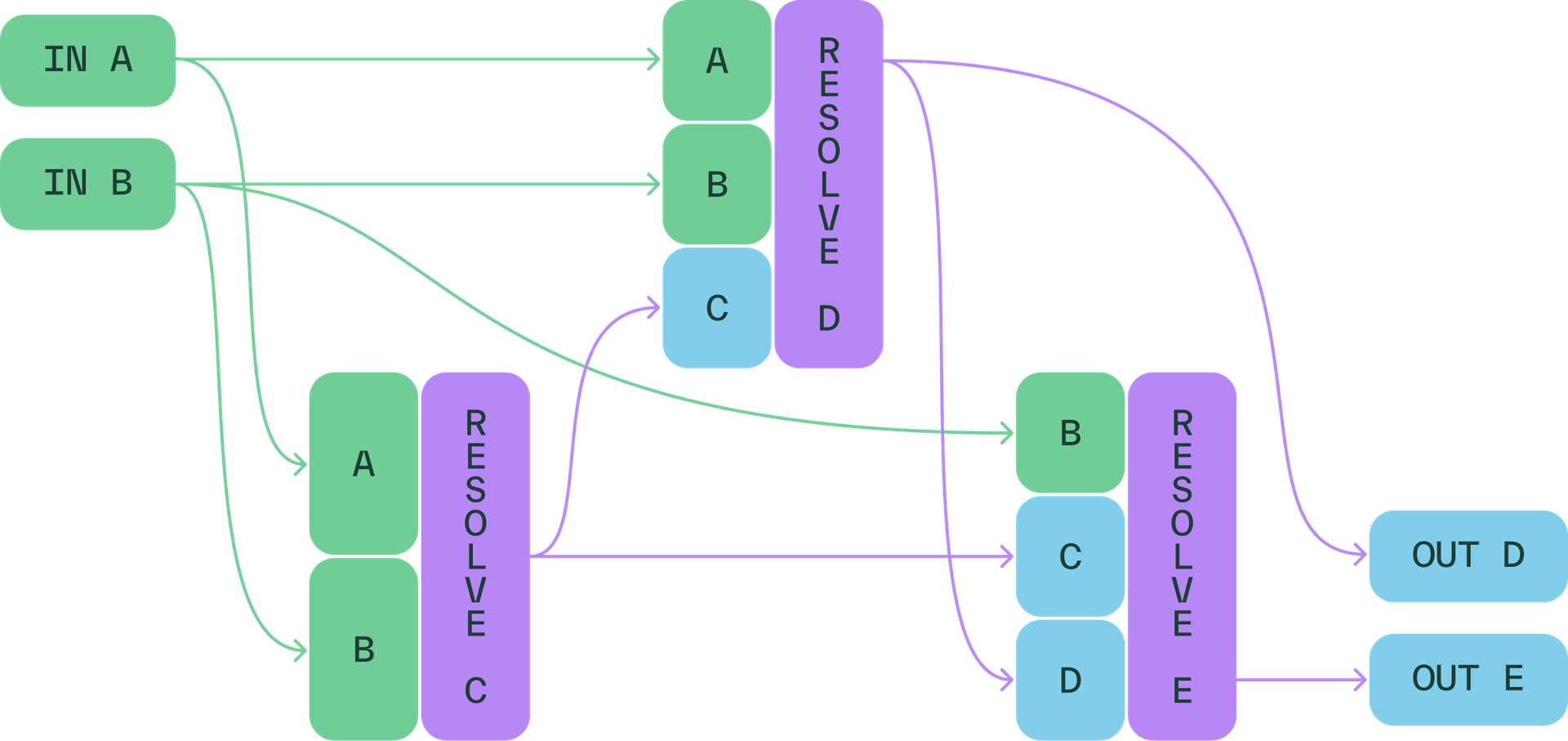

When executing a query, Chalk’s query planner determines which resolvers need to run to compute missing features. Resolvers may:

- Retrieve data directly from SQL databases (Postgres, Snowflake, BigQuery, etc.).

- Compute values using Python.

- Call hosted models (in SageMaker or Vertex), third-party models and LLMs, and external APIs, or perform additional transformations.
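As a rough illustration of this planning step, the sketch below picks the resolvers needed for a set of missing features and orders them by dependency. It assumes a hypothetical registry in which each resolver declares its input and output features; the resolver names and the `plan` function are made up for this example and are not Chalk’s API. Ordering uses the standard library’s `graphlib.TopologicalSorter`.

```python
from graphlib import TopologicalSorter

# Hypothetical registry: resolver name -> (input features, output features).
RESOLVERS: dict[str, tuple[set[str], set[str]]] = {
    "load_transaction": ({"Transaction.id"}, {"Transaction.subtotal", "Transaction.discount"}),
    "load_locale": ({"Transaction.id"}, {"Transaction.sales_tax_rate"}),
    "compute_total": (
        {"Transaction.subtotal", "Transaction.discount", "Transaction.sales_tax_rate"},
        {"Transaction.total"},
    ),
}


def plan(requested: set[str], known: set[str]) -> list[str]:
    """Return the resolvers needed to compute `requested`, dependencies first."""
    # Index which resolver produces each feature.
    producer = {out: name for name, (_, outs) in RESOLVERS.items() for out in outs}
    graph: dict[str, set[str]] = {}  # resolver -> resolvers it depends on
    pending = [f for f in requested if f not in known]
    while pending:
        feature = pending.pop()
        name = producer[feature]  # a KeyError means no resolver can compute it
        if name in graph:
            continue  # already scheduled
        inputs, _ = RESOLVERS[name]
        missing = {f for f in inputs if f not in known}
        graph[name] = {producer[f] for f in missing}
        pending.extend(missing)
    return list(TopologicalSorter(graph).static_order())


print(plan({"Transaction.total"}, known={"Transaction.id"}))
```

This prints the two loaders (in either order) followed by `compute_total`. Features already supplied with the query (here `Transaction.id`) never trigger a resolver, and a resolver is scheduled only once even if it produces several needed features.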

Velox Execution Engine

Once the query planner identifies dependencies, it constructs a Velox execution plan. Velox is an open-source execution engine that processes streams of table fragments efficiently. Chalk integrates Python resolvers by wrapping them as Velox operators, enabling execution at scale.

Python scalar resolvers execute in dedicated subprocesses:

- Data batches are dispatched to a Python subprocess.

- The subprocess converts table data into native Python values.

- The user-defined function executes on each row of data.

- The results are reassembled into a Velox table.

This design allows for the full flexibility of Python to compute arbitrary features, but introduces some performance challenges.
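The four steps above can be modeled in a few lines of plain Python. This is a simplified sketch, not Chalk’s subprocess code: a hypothetical `run_scalar_resolver` helper treats a batch as a dict of Python lists (standing in for a Velox table) and calls the resolver once per row.

```python
from typing import Any, Callable

# A columnar batch: column name -> list of values, with None standing in for null.
Batch = dict[str, list[Any]]


def run_scalar_resolver(
    fn: Callable[..., Any], batch: Batch, inputs: list[str], output: str
) -> Batch:
    """Call `fn` once per row and return the batch with a new output column."""
    num_rows = len(batch[inputs[0]])
    results = []
    for i in range(num_rows):
        # Convert one row of columnar data into native Python values...
        args = [batch[name][i] for name in inputs]
        # ...invoke the user-defined function on that row...
        results.append(fn(*args))
    # ...and reassemble the per-row results into a column.
    return {**batch, output: results}


def compute_total(subtotal, discount, tax_rate, is_taxable):
    # Plain-Python version of the transaction resolver above (no Chalk types).
    if discount is not None:
        subtotal = max(subtotal - discount, 0.0)
    if not is_taxable or tax_rate is None:
        return subtotal
    return subtotal * (1.0 + tax_rate)


batch = {
    "Transaction.subtotal": [100.0, 50.0],
    "Transaction.discount": [10.0, None],
    "Transaction.sales_tax_rate": [0.5, 0.25],
    "Transaction.taxable": [True, False],
}
out = run_scalar_resolver(compute_total, batch, list(batch), "Transaction.total")
print(out["Transaction.total"])  # [135.0, 50.0]
```

Every row pays for argument unpacking, a Python function call, and fresh result objects, which is the per-row overhead discussed next.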

Why is running Python slow?

Python resolvers allow for powerful, expressive logic, but they come with trade-offs:

- Global interpreter lock (GIL) - Within each process, only one thread can execute Python bytecode at a time, so a single process cannot fully utilize all CPU cores when running pure Python.

- Dynamic typing overhead - The Python interpreter must handle unpredictable types at runtime, introducing inefficiencies.

- Heavy value representation - Each Python value (including int and float) is a heap-allocated, reference-counted object, which is memory-intensive.

- Single-row processing - Modern CPUs include single-instruction-multiple-data (SIMD) operations, which allow a single thread to perform many arithmetic operations in parallel. But because scalar resolvers run Python on each row of data individually (which provides the best developer experience), they cannot take advantage of vectorization, even for queries with many rows.
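To make the value-representation point concrete, this snippet compares the memory used by 1,000 floats stored as Python objects against the same values packed into a contiguous `array("d")`. The exact byte counts depend on the interpreter build; on 64-bit CPython each float object takes 24 bytes plus an 8-byte pointer in the list, versus 8 bytes per element in the array.

```python
import sys
from array import array

values = [float(i) for i in range(1000)]

# A list holds pointers to separate heap-allocated, reference-counted float objects.
boxed_bytes = sys.getsizeof(values) + sum(sys.getsizeof(v) for v in values)

# array("d") packs raw 8-byte doubles contiguously, much like a columnar vector.
packed = array("d", values)
packed_bytes = sys.getsizeof(packed)

print(f"boxed: {boxed_bytes} bytes, packed: {packed_bytes} bytes")
```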

To mitigate some of these issues, Chalk runs Python resolvers in multiple subprocesses (potentially across many computers). However, this still isn’t enough to efficiently serve high query volume at scale.

The origin of the Symbolic Python Interpreter

Chalk already runs all of its built-in compute-intensive operations as Velox operations, which avoids all of the above issues: Velox is natively multi-threaded, uses efficient, statically known layouts for all values, and performs vectorized SIMD operations for bulk arithmetic out of the box.

I built the Symbolic Python Interpreter to convert pure Python resolvers into equivalent Velox-native expressions at query-plan time, before execution. This allows our customers to write simple, easy-to-understand Python functions which operate on individual rows of data, but are executed as (potentially SIMD) operations on bulk tables with known type layouts. This transformation is conceptually similar to Python compilation tools like Numba, but Chalk’s compiler is specifically designed to support real-time ML workloads.

How the Symbolic Python Interpreter works

At query-plan time, each row-by-row Python resolver is scanned as a candidate for conversion into a Velox expression. The query planner obtains the resolver’s input types from the type annotations on its feature classes; these are the types of the columns in the operator’s input table.
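One way to picture how a planner can read input types from annotations: Python stores parameter annotations as ordinary runtime objects, so `inspect.signature` can recover them before the function ever runs. The `Feature` class and the `Purchase` feature class below are hypothetical stand-ins for Chalk’s feature classes, used only to keep the sketch self-contained.

```python
import inspect
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class Feature:
    """Hypothetical stand-in for a feature reference such as Purchase.tax."""

    name: str     # column name in the operator's input table
    py_type: Any  # declared Python type of the feature


class Purchase:
    # Hypothetical feature class; each attribute records a column name and type.
    subtotal = Feature("Purchase.subtotal", float)
    tax = Feature("Purchase.tax", Optional[float])
    total = Feature("Purchase.total", float)


def compute_total(subtotal: Purchase.subtotal, tax: Purchase.tax) -> Purchase.total:
    if tax is None:
        return subtotal
    return subtotal + tax


def input_column_types(fn: Any) -> dict[str, Any]:
    """Read each parameter's feature annotation and return {column name: Python type}."""
    params = inspect.signature(fn).parameters.values()
    return {p.annotation.name: p.annotation.py_type for p in params}


types = input_column_types(compute_total)
print(types)
```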

The Symbolic Python Interpreter then executes the function symbolically at query-plan time. Instead of receiving concrete values, the function is called with symbolic values as inputs. These represent trees of computations (consisting of function calls, input columns, conditional branches, and constants), and they propagate through the function as it is executed symbolically.
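The core idea of symbolic execution can be sketched with operator overloading: pass tree-building objects into an ordinary function, and its arithmetic builds an expression tree instead of computing a number. The `Sym`, `lift`, and `column` names are hypothetical, and this is only an illustration of the concept, not how Chalk’s interpreter is built.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Sym:
    """A symbolic value: a node in a tree of deferred computations."""

    op: str           # operation name, e.g. "add" or "column"
    args: tuple = ()  # child nodes (or a raw name/constant for leaves)

    def __add__(self, other: object) -> Sym:
        return Sym("add", (self, lift(other)))

    def __radd__(self, other: object) -> Sym:
        return Sym("add", (lift(other), self))

    def __mul__(self, other: object) -> Sym:
        return Sym("multiply", (self, lift(other)))

    def __rmul__(self, other: object) -> Sym:
        return Sym("multiply", (lift(other), self))

    def __repr__(self) -> str:
        if self.op == "column":
            return f'column("{self.args[0]}")'
        if self.op == "const":
            return repr(self.args[0])
        return f"{self.op}({', '.join(map(repr, self.args))})"


def lift(value: object) -> Sym:
    """Wrap concrete Python values as constant leaves."""
    return value if isinstance(value, Sym) else Sym("const", (value,))


def column(name: str) -> Sym:
    return Sym("column", (name,))


def with_tax(subtotal, tax_rate):
    # Ordinary Python, written with concrete floats in mind.
    return subtotal * (1.0 + tax_rate)


expr = with_tax(column("Transaction.subtotal"), column("Transaction.sales_tax_rate"))
print(expr)
# multiply(column("Transaction.subtotal"), add(1.0, column("Transaction.sales_tax_rate")))
```

Overloading alone cannot capture a check like `if tax is None:`, because `is` cannot be overloaded and `if` requires `__bool__` to return a real `bool`. A symbolic interpreter therefore has to model control flow itself rather than relying on the function’s own execution.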

For example, a very basic function like

```python
@online
def compute_total(
    subtotal: Purchase.subtotal,
    tax: Purchase.tax,
) -> Purchase.total:
    if tax is None:
        return subtotal
    return subtotal + tax
```

becomes a symbolic tree of columnar operations:

```
if_else(
    is_null(column("Purchase.tax")),
    column("Purchase.subtotal"),
    add(column("Purchase.subtotal"), column("Purchase.tax")),
)
```

Each sub-expression within the tree tracks both its exact Python type (allowing Python’s dynamic semantics to be precisely emulated) and its Velox type in the resulting expression (allowing each sub-expression to use an efficient, compact layout).
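A minimal sketch of tracking both types on each node, assuming a hypothetical `TypedExpr` record and a hand-written Python-to-Velox type table. The `add` helper applies Python’s numeric promotion rule (any `float` operand makes the result a `float`) and uses the result type to decide where the Velox expression needs a cast. `Purchase.quantity` is a hypothetical integer feature introduced only for this example.

```python
from dataclasses import dataclass

# Hypothetical mapping from Python scalar types to Velox type names.
VELOX_TYPES = {bool: "BOOLEAN", int: "BIGINT", float: "DOUBLE", str: "VARCHAR"}


@dataclass(frozen=True)
class TypedExpr:
    text: str       # rendered sub-expression
    py_type: type   # exact Python type, used to emulate Python semantics
    nullable: bool  # True if the value may be None (Optional[...])

    @property
    def velox_type(self) -> str:
        return VELOX_TYPES[self.py_type]


def add(a: TypedExpr, b: TypedExpr) -> TypedExpr:
    """Translate `a + b` for numeric operands, following Python's promotion rules."""
    numeric = (bool, int, float)
    if a.py_type not in numeric or b.py_type not in numeric:
        raise NotImplementedError("non-numeric '+' is not handled in this sketch")
    if a.nullable or b.nullable:
        # Python raises TypeError for None + x, so refuse rather than emit a null.
        raise NotImplementedError("operand may be None")
    # Python: any float operand makes the result a float; otherwise it is an int.
    result = float if float in (a.py_type, b.py_type) else int

    def coerce(e: TypedExpr) -> str:
        # Give both operands the same Velox type before adding them.
        return e.text if e.py_type is result else f"cast({e.text} AS {VELOX_TYPES[result]})"

    return TypedExpr(f"add({coerce(a)}, {coerce(b)})", result, nullable=False)


subtotal = TypedExpr('column("Purchase.subtotal")', float, nullable=False)
quantity = TypedExpr('column("Purchase.quantity")', int, nullable=False)  # hypothetical feature
total = add(subtotal, quantity)
print(total.text, total.velox_type)
# add(column("Purchase.subtotal"), cast(column("Purchase.quantity") AS DOUBLE)) DOUBLE
```

Mapping Python’s unbounded `int` to a 64-bit `BIGINT` is a simplification here; an exact emulation would also need to account for overflow.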

The interpreter also tracks control-flow information, so that flow-sensitive information can be used to eliminate dynamic checks and accelerate dynamic functions. For example, even though tax has Python type Optional[float] in the function above, the interpreter can statically determine that tax is not None in the final add expression, because if it were None the function would have already returned.
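The flow-sensitive narrowing described above can be illustrated with a tiny symbolic interpreter over Python’s `ast` module. It is a sketch under strong assumptions: it only understands `if <name> is None:`, `return`, variable references, and `+`, and it uses parameter names as column names. On the branch where the check fails, it records that the variable is not `None`, which is what makes `subtotal + tax` safe to translate.

```python
import ast
from dataclasses import dataclass


@dataclass(frozen=True)
class Val:
    expr: str       # rendered expression tree for this value
    nullable: bool  # may this value be None on the current path?


def is_none_check(test: ast.expr) -> bool:
    """Match the pattern `<name> is None`."""
    return (
        isinstance(test, ast.Compare)
        and isinstance(test.left, ast.Name)
        and len(test.ops) == 1
        and isinstance(test.ops[0], ast.Is)
        and isinstance(test.comparators[0], ast.Constant)
        and test.comparators[0].value is None
    )


def evaluate(node: ast.expr, env: dict[str, Val]) -> Val:
    if isinstance(node, ast.Name):
        return env[node.id]
    if isinstance(node, ast.BinOp) and isinstance(node.op, ast.Add):
        left, right = evaluate(node.left, env), evaluate(node.right, env)
        if left.nullable or right.nullable:
            # Python would raise TypeError on None + x; bail out instead.
            raise NotImplementedError("operand may be None")
        return Val(f"add({left.expr}, {right.expr})", nullable=False)
    raise NotImplementedError(type(node).__name__)


def interpret(body: list[ast.stmt], env: dict[str, Val]) -> Val:
    """Symbolically execute statements on one path; return the returned value."""
    for i, stmt in enumerate(body):
        if isinstance(stmt, ast.Return) and stmt.value is not None:
            return evaluate(stmt.value, env)
        if isinstance(stmt, ast.If) and is_none_check(stmt.test):
            name = stmt.test.left.id
            rest = body[i + 1:]
            # Explore both branches; the else path learns that `name` is not None.
            then = interpret(stmt.body + rest, env)
            narrowed = {**env, name: Val(env[name].expr, nullable=False)}
            other = interpret(stmt.orelse + rest, narrowed)
            return Val(
                f"if_else(is_null({env[name].expr}), {then.expr}, {other.expr})",
                then.nullable or other.nullable,
            )
        raise NotImplementedError(type(stmt).__name__)
    return Val("null", nullable=True)  # falling off the end returns None


def translate(source: str, nullable_inputs: set[str]) -> Val:
    fn = ast.parse(source).body[0]
    env = {a.arg: Val(f'column("{a.arg}")', a.arg in nullable_inputs) for a in fn.args.args}
    return interpret(fn.body, env)


SOURCE = """
def compute_total(subtotal, tax):
    if tax is None:
        return subtotal
    return subtotal + tax
"""

result = translate(SOURCE, nullable_inputs={"tax"})
print(result.expr)
# if_else(is_null(column("tax")), column("subtotal"), add(column("subtotal"), column("tax")))
```

Without the `is None` check, the same interpreter refuses to translate `subtotal + tax` for a nullable `tax`, since Python would raise `TypeError` on `None + float`.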

Unknown or unsupported functions cause the interpreter to bail out and fall back to the original generic Python subprocess invoker, which is capable of running any Python code.
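The bail-out behavior can be sketched as a translator that raises on anything it does not recognize, with a planner that catches the error and keeps the Python subprocess path. The `SUPPORTED_CALLS` allowlist, the `Unsupported` exception, and the `plan` function are hypothetical, and the Velox function names in the mapping are illustrative; the sketch only handles a single `return <expr>` body.

```python
import ast

# Hypothetical allowlist: Python builtins this sketch knows how to translate,
# mapped to illustrative Velox function names.
SUPPORTED_CALLS = {"max": "greatest", "min": "least", "abs": "abs"}


class Unsupported(Exception):
    """Raised when the symbolic interpreter meets code it cannot translate."""


def to_expr(node: ast.expr) -> str:
    if isinstance(node, ast.Name):
        return f'column("{node.id}")'
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return repr(node.value)
    if isinstance(node, ast.BinOp) and isinstance(node.op, ast.Sub):
        return f"subtract({to_expr(node.left)}, {to_expr(node.right)})"
    if (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id in SUPPORTED_CALLS
        and not node.keywords
    ):
        args = ", ".join(to_expr(arg) for arg in node.args)
        return f"{SUPPORTED_CALLS[node.func.id]}({args})"
    # Anything else (unknown functions, attribute access, ...) aborts translation.
    raise Unsupported(f"unsupported node: {type(node).__name__}")


def plan(source: str) -> tuple[str, str]:
    """Return ("velox", expression) on success, or ("python", reason) to fall back."""
    fn = ast.parse(source).body[0]
    try:
        if len(fn.body) != 1 or not isinstance(fn.body[0], ast.Return) or fn.body[0].value is None:
            raise Unsupported("only a single `return <expr>` body is handled here")
        return ("velox", to_expr(fn.body[0].value))
    except Unsupported as exc:
        # Fall back to the generic Python subprocess path, which can run anything.
        return ("python", str(exc))


CLAMPED = "def f(subtotal, discount):\n    return max(subtotal - discount, 0.0)\n"
SCRAPED = "def g(html):\n    return BeautifulSoup(html).title.string\n"

print(plan(CLAMPED))  # ('velox', 'greatest(subtract(column("subtotal"), column("discount")), 0.0)')
print(plan(SCRAPED))  # ('python', 'unsupported node: Attribute')
```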

At the end of the function, if symbolic execution was successful, the query planner has obtained a single complex Velox expression representing all of the Python resolver’s code, which emulates Python semantics exactly (including nullability, short-circuiting, and loops over list-typed features). Then, Python execution can be replaced entirely by a Velox projection operator which computes the resolver’s output as an additional column.
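Once translation succeeds, executing the resolver amounts to evaluating one expression tree per batch. The pure-Python evaluator below is a stand-in for a Velox projection: each node is evaluated once over a whole column rather than once per row. The tuple-based tree format and the `evaluate` and `project` helpers are assumptions for illustration; a real engine works on contiguous typed buffers, which is what lets it use SIMD.

```python
from typing import Any, Optional

Column = list[Optional[float]]


def evaluate(expr: tuple, batch: dict[str, Column]) -> list[Any]:
    """Evaluate an expression tree one whole column at a time."""
    op, *args = expr
    if op == "column":
        return batch[args[0]]
    if op == "is_null":
        (values,) = [evaluate(a, batch) for a in args]
        return [v is None for v in values]
    if op == "add":
        left, right = (evaluate(a, batch) for a in args)
        # A null input yields a null output rather than raising.
        return [None if x is None or y is None else x + y for x, y in zip(left, right)]
    if op == "if_else":
        cond, then, other = (evaluate(a, batch) for a in args)
        return [t if c else o for c, t, o in zip(cond, then, other)]
    raise ValueError(f"unknown op {op!r}")


def project(expr: tuple, batch: dict[str, Column], output: str) -> dict[str, Column]:
    """A projection: append the expression's result as a new column."""
    return {**batch, output: evaluate(expr, batch)}


EXPR = (
    "if_else",
    ("is_null", ("column", "Purchase.tax")),
    ("column", "Purchase.subtotal"),
    ("add", ("column", "Purchase.subtotal"), ("column", "Purchase.tax")),
)

batch = {"Purchase.subtotal": [10.0, 20.0, 5.0], "Purchase.tax": [1.5, None, 0.5]}
result = project(EXPR, batch, "Purchase.total")
print(result["Purchase.total"])  # [11.5, 20.0, 5.5]
```

For simplicity this sketch evaluates both branches of `if_else` on every row and relies on `add` producing null for null inputs; an engine can instead restrict each branch to the rows that select it, which avoids evaluating branches that Python would have skipped.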

How symbolic interpretation accelerates execution

By bypassing Python’s runtime overhead, this approach delivers substantial performance improvements. The resulting expression uses a compact, efficient representation for each input, output, and intermediate sub-expression, avoiding the need for excessive heap allocations or dynamic checks on every function call and arithmetic operation.

Velox natively runs all operators on multiple threads, allowing for trivial CPU scaling as multiple requests are handled concurrently or one request scales to very large numbers of rows. In addition, Velox operates on batches of rows, so each operation can trivially make use of SIMD instructions when dealing with multi-row inputs since the row-by-row function is converted into a columnar vector expression.

The impact

By eliminating Python’s inefficiencies, Chalk’s Python resolvers now:

- Run in parallel without unnecessary overhead.

- Translate to Velox-native expressions for optimized execution.

- Execute faster without requiring users to write specialized performance-tuned code.

Since Python resolvers are automatically accelerated, our users can focus on defining features and experimenting, instead of spending time on a tedious and extraneous “productionization” step. Chalk’s users can just write ordinary Python code, and it’s ready to ship for production workloads.

Final thoughts

Our work on the Symbolic Python Interpreter changes how Chalk executes Python resolvers. By eliminating Python’s runtime inefficiencies, Chalk now:

- Runs Python resolvers in parallel.

- Identifies vectorizable expressions for Velox-native execution.

- Uses a Symbolic Python Interpreter to analyze and optimize function logic automatically.

By combining Python’s ease of use with a highly optimized execution engine, Chalk ensures that real-time ML workloads remain both flexible and fast. Stay tuned for further optimizations as we continue pushing the boundaries of real-time feature computation.

Defining new feature resolvers used to mean choosing between expressiveness and production-ready efficiency. With Chalk’s accelerated Python resolvers, now you get both — flexible and fast.