Chalk for MLOps

Build fully observable ML pipelines with automatic data lineage on every query.

TALK TO AN ENGINEER

Why MLOps engineers choose Chalk

Built-in versioning and auditability

Automatic versioning and audit trails for consistent, reproducible feature definitions

Feature monitoring and alerting

Monitoring with alerts so features remain fresh, accurate, and drift-free

Infrastructure observability

Logs for debugging, Kubernetes cluster activity, and system-level metrics with alerts

On-demand pre-computation

On-demand pre-computation that replaces brittle pipeline logic and manual orchestration

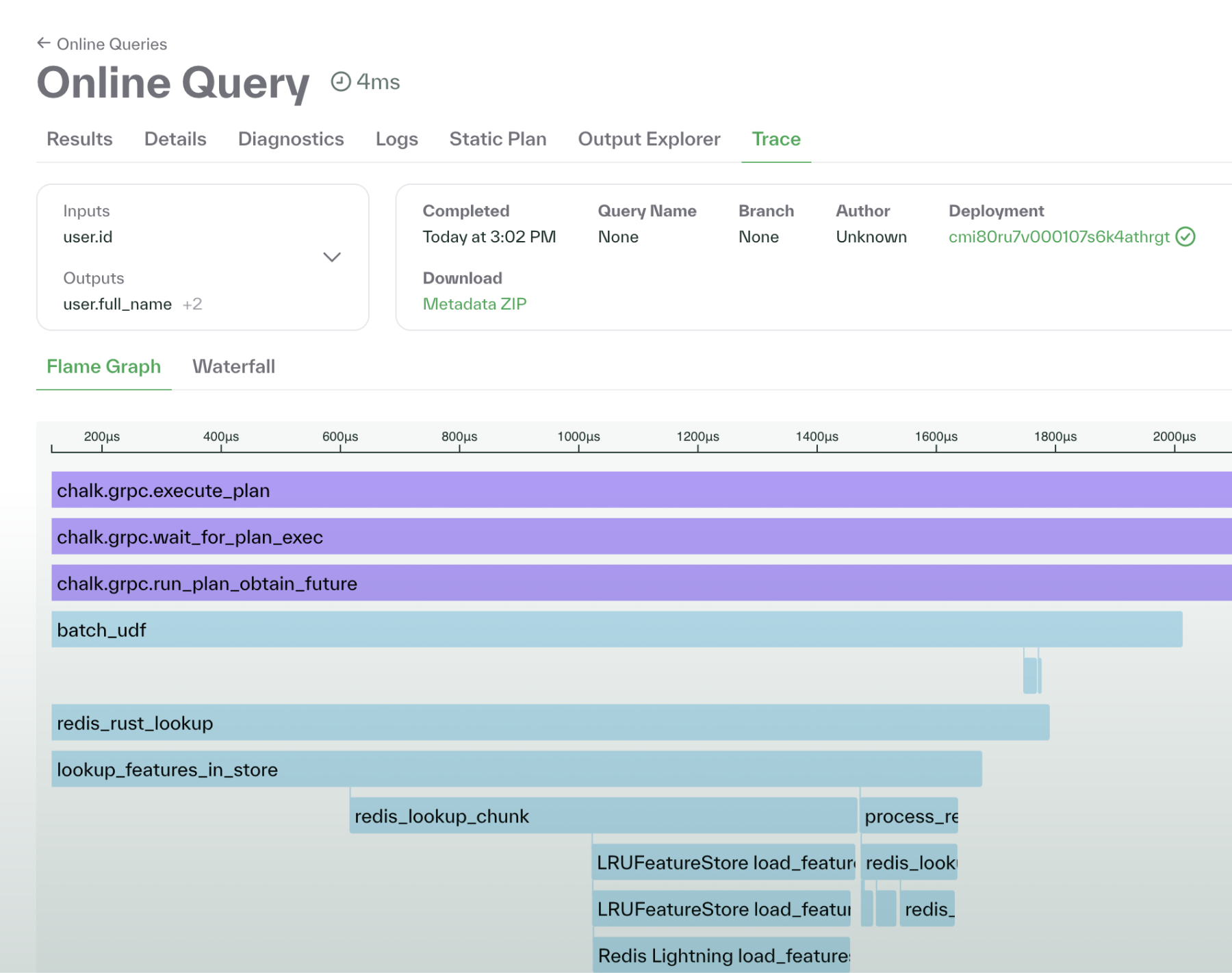

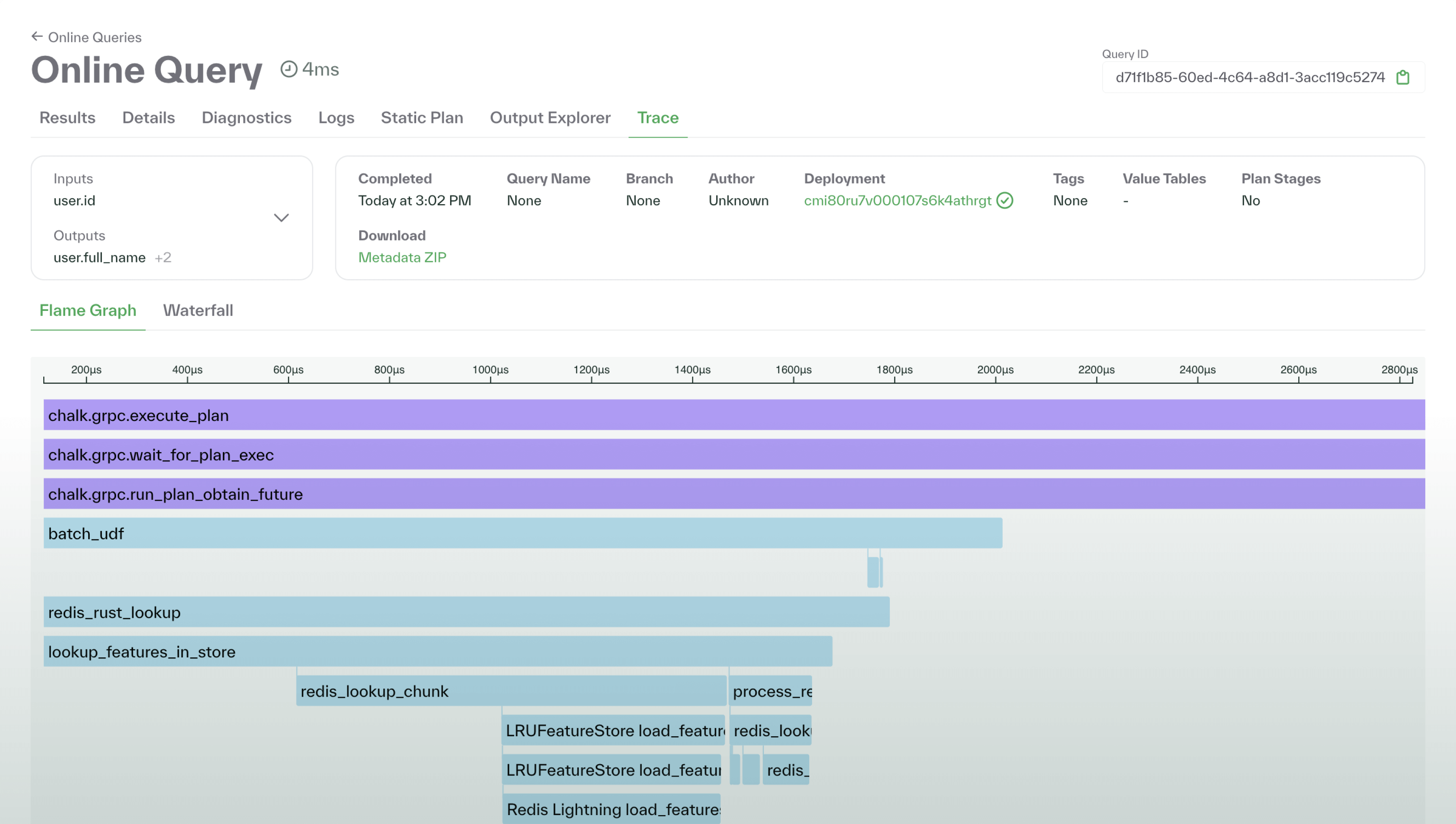

Tracing for query performance diagnosis

Tracing gives teams deep visibility into how queries run inside Chalk. Each resolver and model call is instrumented and timed, making it easy to identify performance bottlenecks and understand why a query behaves the way it does.
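To make the idea of per-call timing concrete, here is a minimal sketch in plain Python. It does not use Chalk's API: the `traced` decorator, the `SPANS` list, and the two stand-in functions are all hypothetical names chosen for illustration. It only shows how wrapping each step of a query in a timer can surface the slowest step.

```python
import time
from functools import wraps

# Hypothetical in-memory span store for illustration; not part of Chalk.
SPANS = []

def traced(fn):
    """Record the wall-clock duration of every call to fn."""
    @wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        finally:
            # Append the span even if fn raises, so failures are still timed.
            SPANS.append({
                "name": fn.__name__,
                "seconds": time.perf_counter() - start,
            })
    return wrapper

@traced
def fetch_user_age(user_id):
    # Stand-in for a resolver that reads from a data source.
    time.sleep(0.01)
    return 42

@traced
def score_model(age):
    # Stand-in for a model call.
    time.sleep(0.02)
    return age / 100

def run_query(user_id):
    # Each step is timed independently as the query executes.
    return score_model(fetch_user_age(user_id))

def slowest_span(spans):
    """Return the recorded span with the longest duration."""
    return max(spans, key=lambda s: s["seconds"])
```

Calling `run_query(1)` and then `slowest_span(SPANS)` returns the span for whichever step took longest, which is the kind of question a trace view answers at a glance.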

Tracing docs

Chalk makes it easy to get started, and its isolation model keeps everything safe by default. Teams don’t block each other anymore.

Melvin Lew, MLOps Engineer

Reproducible and auditable features by default

Chalk applies modern software engineering to ML workflows:

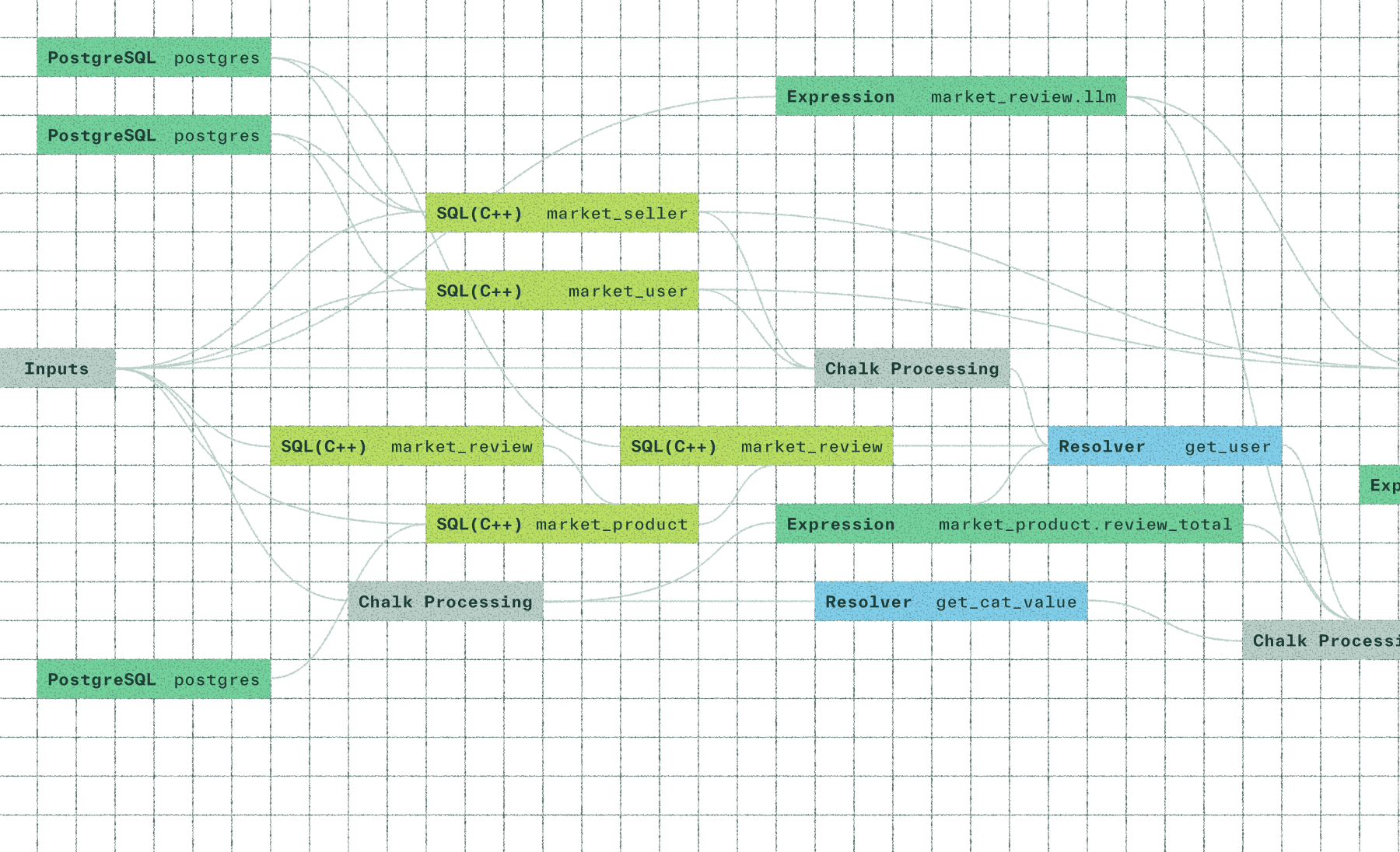

- View lineage from data sources through transformations to final outputs, with version history captured automatically

- Browse and search historical versions of every feature, query, resolver, and deployment in one unified feature catalog

- Audit changes and reproduce results instantly with complete traceability built into the dashboard

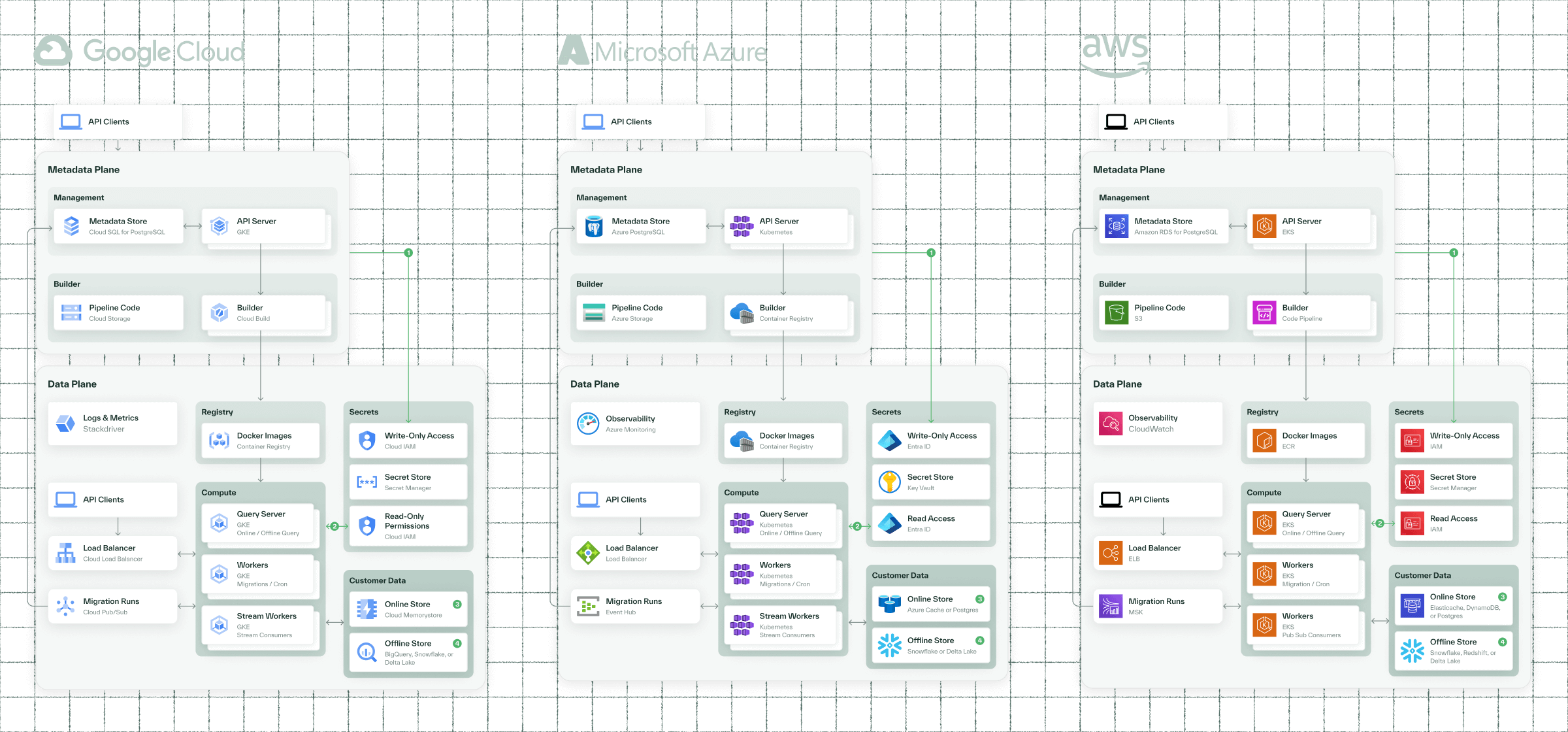

Built for scale and trust

Chalk runs in your cloud (AWS, GCP, Azure), meeting enterprise standards for security, compliance, and deployment flexibility.

DEPLOY IN YOUR CLOUD

Explore how Chalk works

Ready to ship next-gen ML?

Talk to an engineer and see how Chalk can power your production AI and ML systems.

TALK TO AN ENGINEER