Power search and ranking with real-time features

Chalk powers search models by computing relevance features and embeddings at query time. Teams leverage Chalk to adapt results instantly based on user context, behavior, and intent, while maintaining full control over ranking logic and data.

TALK TO AN ENGINEER

Why Chalk for search and ranking models

Retrieve and rank with embeddings in real time

Retrieve candidates using embedding similarity, and re-rank results with live behavioral and contextual features at query time.

Serve search features with ultra-low latency

Compute features and embeddings on demand with predictable, single-digit millisecond latency, supporting high query volume and large candidate sets.

Maintain point-in-time correctness

Use the same feature definitions for training and production search traffic to prevent online and offline drift as ranking logic evolves.

Improve relevance with decision-time ranking

Deliver more relevant results by ranking with embeddings and live context computed at query time, not static scores generated hours earlier.

Adapt rankings without pipeline rewrites

Change ranking logic and features without rebuilding batch jobs or maintaining separate online and offline paths.

Scale search without relevance regressions

Serve billions of searches with consistent latency while validating ranking changes before they reach production.

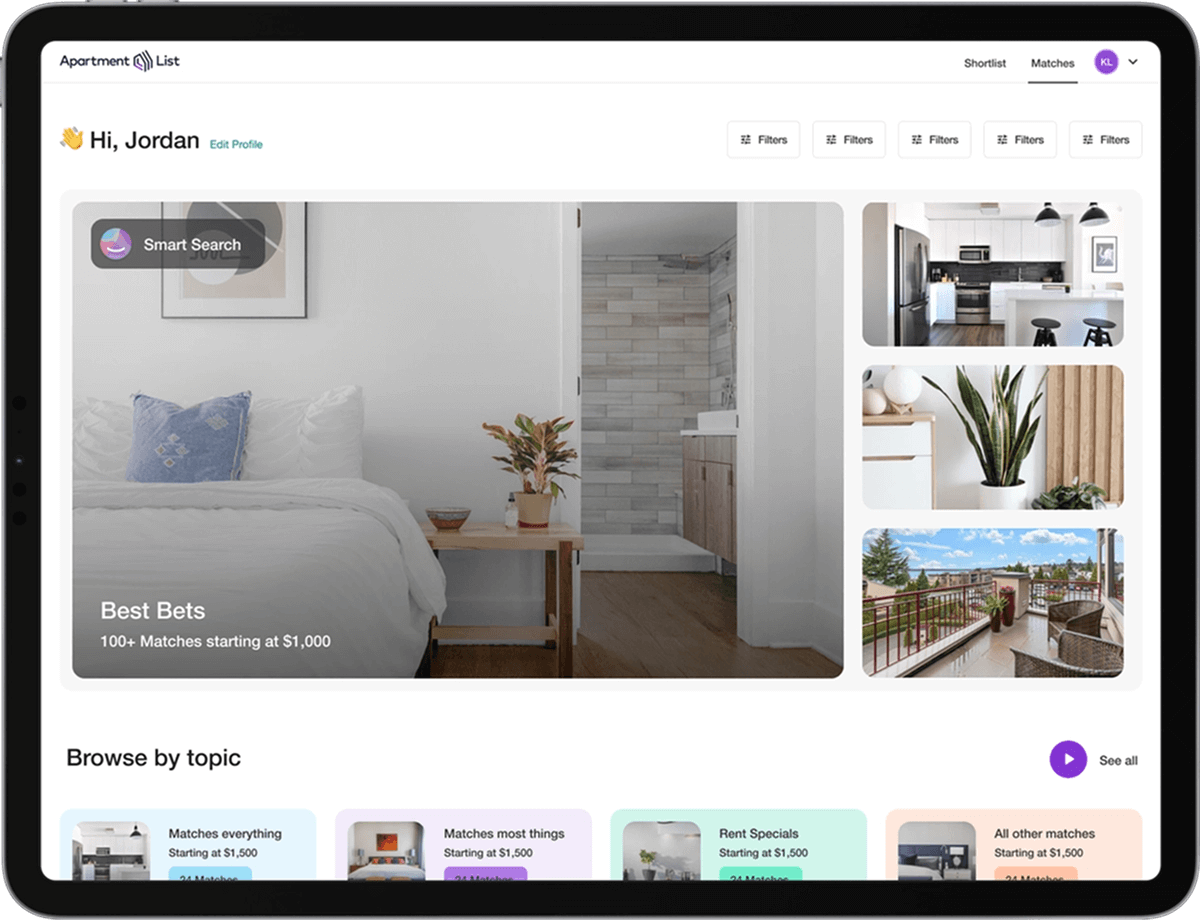

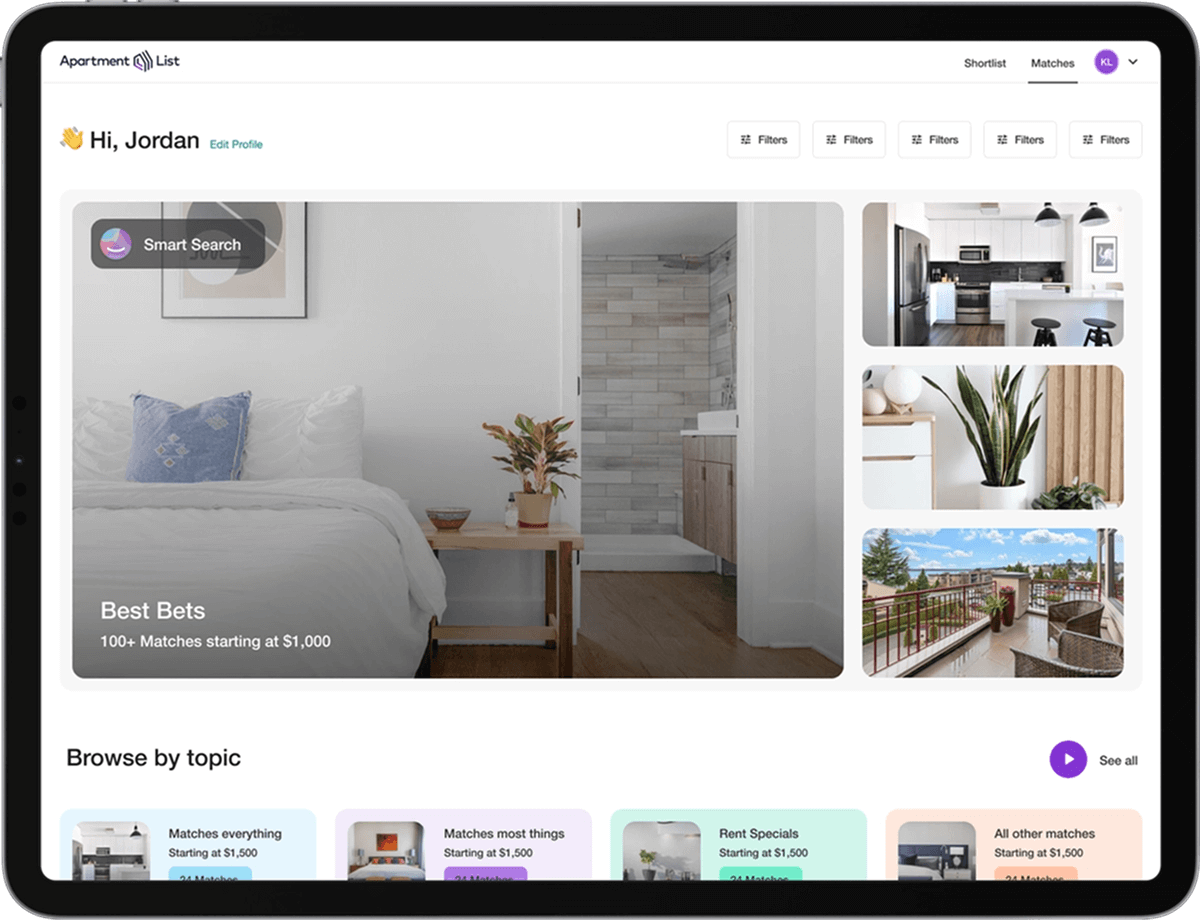

Chalk’s performance directly affects the quality of our search and discovery models, which power everything from price flexibility to apartment ranking. The ability to call real-time features without dealing with stream complexity has been huge for us.

Matt Weale, Software engineer

Rank results based on semantic relevance.

Retrieve candidates using query and item embeddings, then re-rank results with live behavioral and contextual features so relevance reflects meaning, not just keywords.
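As a rough illustration of the retrieve-then-re-rank pattern described above, here is a minimal sketch in plain Python. It does not use Chalk's API. The function names, the toy embeddings, and the `LIVE` click-rate signal are all hypothetical, and a production system would use an approximate nearest-neighbor index rather than a full scan.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_vec, item_vecs, k):
    """Stage 1: return the k (item_id, similarity) pairs closest to the query."""
    scored = [(cosine(query_vec, vec), item_id) for item_id, vec in item_vecs.items()]
    scored.sort(reverse=True)
    return [(item_id, sim) for sim, item_id in scored[:k]]

def rerank(candidates, live_features, weight=0.5):
    """Stage 2: blend similarity with a live signal read at query time.

    live_features maps item_id to a hypothetical recent click rate in [0, 1].
    """
    def score(candidate):
        item_id, sim = candidate
        return (1 - weight) * sim + weight * live_features.get(item_id, 0.0)
    return [item_id for item_id, _ in sorted(candidates, key=score, reverse=True)]

# Toy data (assumed for illustration only).
ITEM_VECS = {"a": [1.0, 0.0], "b": [0.8, 0.6], "c": [0.0, 1.0]}
LIVE = {"a": 0.1, "b": 0.9}

candidates = retrieve([1.0, 0.0], ITEM_VECS, k=2)
ranked = rerank(candidates, LIVE)
print(ranked)
```

In this toy data, item `a` is the closest embedding match, but item `b` ranks first after re-ranking because its live signal is stronger.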

Rank results using live supply and availability.

Compute relevance, availability, and demand features at query time to rank listings based on what is actually available right now, not static indexes or delayed aggregates.
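The idea of ranking on current availability and demand can be sketched as follows. This is an illustrative example, not Chalk code. The `Listing` fields, the `demand_weight` parameter, and the scoring formula are assumptions chosen to keep the example small.

```python
from dataclasses import dataclass

@dataclass
class Listing:
    listing_id: str
    relevance: float      # base relevance score in [0, 1]
    units_available: int  # availability as read at query time
    recent_views: int     # demand signal over a recent window

def rank_listings(listings, demand_weight=0.2):
    """Drop unavailable listings, then rank by relevance plus normalized demand."""
    available = [l for l in listings if l.units_available > 0]
    max_views = max((l.recent_views for l in available), default=0)

    def score(listing):
        demand = listing.recent_views / max_views if max_views else 0.0
        return listing.relevance + demand_weight * demand

    return [l.listing_id for l in sorted(available, key=score, reverse=True)]

# Toy data (assumed): the most relevant listing has no units left.
listings = [
    Listing("loft", relevance=0.9, units_available=0, recent_views=50),
    Listing("studio", relevance=0.7, units_available=3, recent_views=40),
    Listing("duplex", relevance=0.75, units_available=1, recent_views=5),
]
ranked_ids = rank_listings(listings)
print(ranked_ids)
```

Because availability is read when the query runs, the `loft` listing drops out even though a static index would have placed it first.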

Personalize GIS results using live user intent and geospatial data.

Rank listings using real-time user preferences, location context, and session behavior so results adapt instantly as users refine filters and searches.
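One way to picture location context and session behavior feeding a ranking is the sketch below. It is a simplified example under stated assumptions, not Chalk's implementation. The listing dictionaries, the `session_filters` set, the 10 km cutoff, and the 0.5 boost per matching filter are all hypothetical.

```python
import math

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two lat/lon points."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlam / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def personalize(listings, user_lat, user_lon, session_filters, max_km=10.0):
    """Rank listings by proximity plus a boost for each active session filter matched."""
    def score(listing):
        dist = haversine_km(user_lat, user_lon, listing["lat"], listing["lon"])
        proximity = max(0.0, 1.0 - dist / max_km)
        matches = len(session_filters & listing["tags"])
        return proximity + 0.5 * matches
    return [l["id"] for l in sorted(listings, key=score, reverse=True)]

# Toy data (assumed for illustration only).
LISTINGS = [
    {"id": "near", "lat": 40.01, "lon": -75.0, "tags": {"parking"}},
    {"id": "far", "lat": 40.05, "lon": -75.0, "tags": {"pets", "parking"}},
]

before = personalize(LISTINGS, 40.0, -75.0, set())
after = personalize(LISTINGS, 40.0, -75.0, {"pets"})
print(before, after)
```

With no filters the closer listing ranks first. Once the user adds a `pets` filter, the farther listing that matches it moves to the top.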

Live recommendations at scale


Whatnot delivers dynamic recommendations during live streams with Chalk, serving user and product features instantly to maximize engagement.

Vector search with Chalk

Run nearest-neighbor search on embeddings at query time and re-rank results using the same production feature definitions.

Nearest neighbor docs

Search and ranking at scale

Apartment List uses Chalk to compute ranking features at query time, keeping search results aligned with live user intent and inventory changes. This allows the team to personalize search results, iterate on ranking logic safely, and maintain consistency between offline evaluation and production behavior.

Read story


Build search systems that adapt instantly.

Get started with Chalk today and transform your ML workflows.
