A feature store is a centralized system that manages and serves machine learning features: the transformed data that models use to make predictions. It ensures features are defined once and reused consistently across training and production, keeping models accurate and reliable.

As more companies adopt machine learning for real-time applications such as fraud detection or medical diagnostics, feature stores have become essential. They solve common challenges like keeping training and serving pipelines in sync, reducing recomputation, and preventing feature drift. By acting as the single source of truth for features, a feature store enables engineering teams to move faster, collaborate more effectively, and scale models with confidence.

Why feature stores matter for ML teams

Machine learning teams often discover that the hardest part of building models isn’t the model itself; it’s managing the data that powers it. Without a feature store, organizations face recurring problems that slow delivery, increase costs, and make models less reliable.

Common challenges include:

- Inconsistent training vs production features: Features are often reimplemented when models move from notebooks into production, which leads to drift and mismatched results between training and inference.

- Duplicate work across teams: Different teams frequently rebuild the same features in parallel, wasting time and slowing experimentation.

- Inefficient recomputation: Complex transformations get recomputed repeatedly instead of being reused, driving up infrastructure costs and delaying iteration cycles.

- No single source of truth: Without a central system, feature definitions live in scattered scripts and pipelines, making governance and compliance nearly impossible.

- Hard-to-debug lineage: When something goes wrong, teams struggle to trace how a feature was built, what data sources it depended on, and where it diverged. This erodes trust in models once they’re deployed.

Feature stores were built to solve these problems. By centralizing feature definitions and making them reusable across training and production, they ensure consistency, reduce wasted effort, and give ML teams the confidence to scale models into production.

Core benefits of a feature store

Adopting a feature store not only solves common pain points but also unlocks new capabilities that make MLOps teams faster and more consistent, and their models easier to trust.

- Consistency: Feature stores ensure training and production use the exact same feature definitions. This online/offline sync prevents the drift that comes from mismatched implementations, so predictions in production match the results you saw during model development.

- Real-time serving: Fresh features can be delivered in milliseconds, powering critical applications like fraud detection, personalization, and recommendations. Instead of relying on stale batch data, teams can make decisions with the most up-to-date signals available.

- Faster experimentation: By centralizing and reusing features, teams can build new models without starting from scratch. This accelerates iteration cycles, reduces duplicate work, and helps organizations scale experimentation across multiple teams and projects.

- Feature lineage & governance: A feature store tracks how features were created, their dependencies, and how they’ve evolved over time. This lineage makes debugging easier, supports compliance requirements, and builds confidence in the reliability of production models.

Common use cases for feature stores

Feature stores are increasingly seen as core infrastructure because they make it easier to deliver reliable, low-latency data to models. Here are some of the most common applications in real-world ML systems:

Fraud detection

Real-time fraud systems rely on streaming features like transaction history, device fingerprints, and geolocation signals. With a feature store, these inputs are served in milliseconds, helping financial institutions flag suspicious activity before it reaches the customer.

For a detailed case study on how a feature store can be used to build a fraud detection system, refer to Feature Store at Work: A Tutorial on Fraud and Risk.

Recommendations

Recommendation engines depend on up-to-date behavioral data (what a user has watched, clicked, or purchased recently). A feature store ensures those signals are fresh and consistent, powering more accurate and relevant recommendations.

Personalization

From media streaming to e-commerce, personalization requires fast access to a user’s latest activity. Feature stores can stream events like likes, shares, or browsing history at low latency, enabling models to respond in real time.

Compliance

In regulated industries like finance and healthcare, reproducibility is critical. Feature stores support 'time travel,' making it possible to reconstruct what a feature looked like at any point in time. This capability is essential for audits, debugging, and compliance reporting.

These use cases show why feature stores are now standard infrastructure for modern ML teams.

Feature store vs data warehouse vs vector database

When exploring ML infrastructure, it’s easy to confuse feature stores with other systems like data warehouses or vector databases. While they sometimes overlap, each serves a distinct purpose.

- Data Warehouse: Data warehouses excel at batch analytics, business reporting, and aggregating historical data. They aren’t designed for low-latency inference or temporal consistency, which are essential for ML models in production.

- Vector Database: Vector databases are optimized for similarity search and embeddings, great for use cases like semantic search or recommendation retrieval. However, they don’t provide feature engineering, consistency guarantees, or feature lineage tracking.

- Feature Store: Feature stores are purpose-built for ML workflows. They handle feature computation, ensure online/offline consistency, enable feature reuse, and provide lineage so teams can debug, audit, and govern features confidently.

| System | Strengths | Limitations for ML Workflows |
|---|---|---|
| Data Warehouse | Batch analytics, business intelligence | Not built for real-time inference or time travel |
| Vector Database | Embedding storage, similarity search | Doesn’t handle feature engineering or lineage |
| Feature Store | Feature computation, serving, consistency, lineage | Purpose-built for bridging training and production |

Each system has its place, but only feature stores are designed to reliably bridge the gap between training and production.

Feature store architecture explained

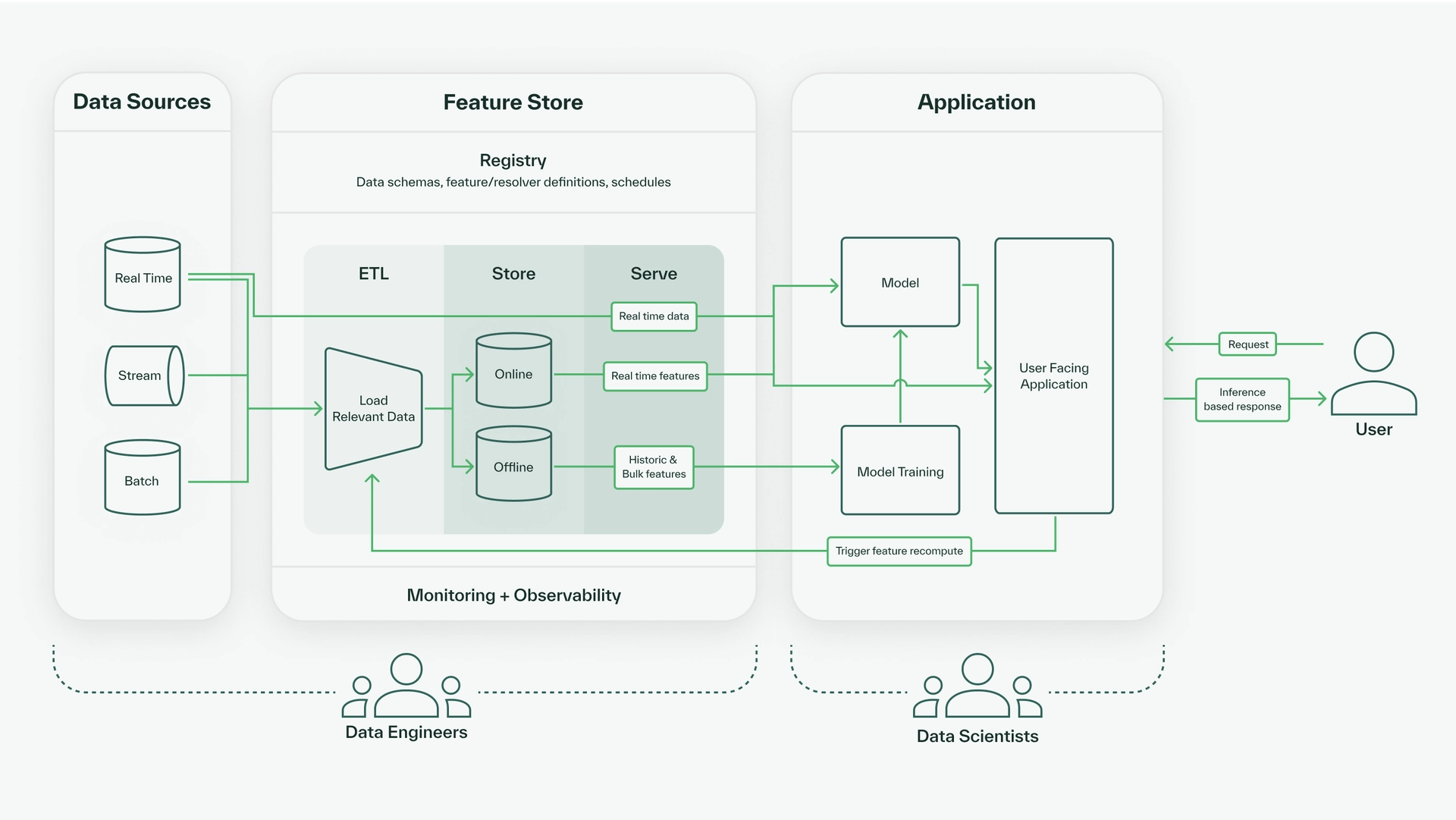

Feature stores are defined by four core components in the flow of data:

- Sourcing: connecting to raw data sources,

- Transforming: loading and running transformations on data,

- Storing: persisting transformed features, and

- Serving: providing access to transformed data.

There are two additional (though equally important) components that extend naturally from the core components and respond to the challenges that feature stores address:

- Monitoring: detecting feature drift, shifts in latency and storage usage, and data freshness.

- Experimenting: iterating on feature definitions and resolvers (the functions that compute feature values) during model training.

These two components aren’t definitional, but they are essential parts of making transformed features production-grade.

Sourcing

Feature stores are built to be data-source agnostic. They implement connectors that let data flow in from your company’s upstream sources, which makes them easy to integrate into most existing data architectures. Common categories include real-time, streaming, and batch sources. From these, the feature store loads both existing feature data and the raw input data required to compute new feature values.
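
Being source agnostic usually means every connector exposes the same small interface. The sketch below is a minimal illustration that assumes a hypothetical `Source` protocol with a `read()` method yielding dict rows. The names `BatchSource`, `StreamSource`, and `ingest` are also illustrative and do not come from any particular product's API.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Iterator, Protocol

Row = dict[str, Any]

class Source(Protocol):
    """Hypothetical connector interface: every source yields plain dict rows."""
    name: str

    def read(self) -> Iterator[Row]: ...

@dataclass
class BatchSource:
    """Stands in for a warehouse table or file export that is read in full."""
    name: str
    rows: list[Row]

    def read(self) -> Iterator[Row]:
        yield from self.rows

@dataclass
class StreamSource:
    """Stands in for a message topic; events are consumed in arrival order."""
    name: str
    events: deque = field(default_factory=deque)

    def publish(self, event: Row) -> None:
        self.events.append(event)

    def read(self) -> Iterator[Row]:
        # Drain pending events so each one is delivered exactly once.
        while self.events:
            yield self.events.popleft()

def ingest(sources: list[Source]) -> list[Row]:
    """Pull rows from every connector and tag each row with its origin."""
    out: list[Row] = []
    for src in sources:
        for row in src.read():
            out.append({**row, "_source": src.name})
    return out
```

Downstream transformation code depends only on `read()`. Adding a new kind of source therefore means writing one more connector, not changing any feature logic.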

Transforming

Feature stores compute and persist transformed data. Users write most of their custom logic and code for the transformation step. The goal of transformation is to specify the desired structure of your features and the logic used to compute feature values. These specifications make up the feature store's registry, which serves as a schema for all current and historical feature definitions. The registry should define each feature's inputs (data sources or other features) and the computation that produces its value.
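
Here is a minimal sketch of such a registry. It assumes a hypothetical `Registry` class with a `register` decorator; this is not Chalk's API or any other product's. Each definition records its inputs and computation, and every version is kept so that historical definitions remain available.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass(frozen=True)
class Feature:
    name: str                    # e.g. "user.avg_txn_amount"
    inputs: tuple[str, ...]      # raw columns or other features it depends on
    compute: Callable[..., Any]  # the transformation that produces the value
    version: int

class Registry:
    """Schema of current and historical feature definitions (illustrative only)."""

    def __init__(self) -> None:
        self._versions: dict[str, list[Feature]] = {}

    def register(self, name: str, inputs: tuple[str, ...]):
        def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
            history = self._versions.setdefault(name, [])
            # Re-registering a name adds a new version instead of overwriting.
            history.append(Feature(name, inputs, fn, version=len(history) + 1))
            return fn
        return decorator

    def current(self, name: str) -> Feature:
        return self._versions[name][-1]

    def history(self, name: str) -> list[Feature]:
        return list(self._versions[name])

registry = Registry()

@registry.register("user.avg_txn_amount", inputs=("txn.amounts",))
def avg_txn_amount(amounts: list[float]) -> float:
    return sum(amounts) / len(amounts) if amounts else 0.0
```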

Once the registry has been defined and deployed, feature stores further optimize performance by running transformations on demand. Rather than proactively running every computation in the registry on all possible upstream data, a well-implemented feature store computes only the values that have not already been computed and persisted in its stores.

When a feature store receives a query, it determines which of the requested feature values are already computed and stored. For values that must be freshly computed, it looks up the registry definitions that map to each one, runs the specified transformations, and then returns and persists the resulting feature data.
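
The query path described above can be sketched as a memoized dependency walk. This is a simplified in-memory illustration. The `RESOLVERS` table, the `raw.` naming convention, and the `LazyFeatureStore` class are all hypothetical.

```python
from typing import Any, Callable

# Hypothetical registry: feature name -> (input names, resolver function).
# Names starting with "raw." are read directly from source data.
RESOLVERS: dict[str, tuple[tuple[str, ...], Callable[..., Any]]] = {
    "user.txn_count": (("raw.amounts",), lambda amounts: len(amounts)),
    "user.txn_total": (("raw.amounts",), lambda amounts: sum(amounts)),
    "user.avg_txn": (
        ("user.txn_total", "user.txn_count"),
        lambda total, count: total / count if count else 0.0,
    ),
}

class LazyFeatureStore:
    def __init__(self, raw: dict[str, dict[str, Any]]) -> None:
        self.raw = raw                               # entity_id -> raw columns
        self.store: dict[tuple[str, str], Any] = {}  # (entity_id, feature) -> value
        self.resolver_runs = 0                       # how many resolvers actually ran

    def _get(self, entity_id: str, feature: str) -> Any:
        if feature.startswith("raw."):
            return self.raw[entity_id][feature.removeprefix("raw.")]
        key = (entity_id, feature)
        if key in self.store:                        # already computed: reuse it
            return self.store[key]
        inputs, fn = RESOLVERS[feature]
        args = [self._get(entity_id, dep) for dep in inputs]  # resolve dependencies first
        value = fn(*args)
        self.resolver_runs += 1
        self.store[key] = value                      # persist for future queries
        return value

    def query(self, entity_id: str, features: list[str]) -> dict[str, Any]:
        return {f: self._get(entity_id, f) for f in features}
```

In this sketch, the first query for `user.avg_txn` runs three resolvers: the total, the count, and the average. A later query for `user.txn_count` and `user.avg_txn` is answered entirely from the store without running anything.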

Storing

Machine learning teams require two forms of data access. Real-time access serves modeled or computed results to customers. For example, a bank might want to know whether a transaction that just happened is fraudulent, and an e-commerce company might want to recommend content based on the products a user has just viewed. Retroactive access generates data for model training, tracks how features change over time, and supports analytic queries.

These access patterns are very different, but their outputs need to be consistent: the data used to train a model must look like the data used to make predictions. Serving both through one interface, backed by the same feature definitions, helps guarantee this consistency and improves efficiency. Feature stores like Chalk accomplish this through two abstractions: an online store and an offline store. The online store is a key-value store responsible for low-latency, real-time serving; it remembers the latest computed value of each feature, keyed by primary key. The offline store is responsible for “remembering” every feature value you’ve computed, and it can be queried directly (for instance, to access historical data).
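
The sketch below illustrates the split with two in-memory stand-ins. Production systems back these with real databases, and the class names here are hypothetical. A single write path updates both stores: the online store keeps only the newest value per key, while the offline store keeps the full timestamped history so past values can be looked up.

```python
import bisect
from typing import Any

Key = tuple[str, str]  # (entity_id, feature name)

class OnlineStore:
    """Key-value store that keeps only the latest value for each key."""

    def __init__(self) -> None:
        self._data: dict[Key, tuple[float, Any]] = {}

    def write(self, key: Key, value: Any, ts: float) -> None:
        # Ignore late-arriving writes that are older than what is already served.
        if key not in self._data or ts >= self._data[key][0]:
            self._data[key] = (ts, value)

    def get(self, key: Key) -> Any:
        entry = self._data.get(key)
        return entry[1] if entry else None

class OfflineStore:
    """Append-only history that remembers every value with its timestamp."""

    def __init__(self) -> None:
        self._log: dict[Key, list[tuple[float, Any]]] = {}

    def write(self, key: Key, value: Any, ts: float) -> None:
        history = self._log.setdefault(key, [])
        history.append((ts, value))
        history.sort(key=lambda entry: entry[0])  # keep each history in time order

    def as_of(self, key: Key, ts: float) -> Any:
        """Return the value that was current at time `ts` ("time travel")."""
        history = self._log.get(key, [])
        i = bisect.bisect_right([t for t, _ in history], ts)
        return history[i - 1][1] if i else None

class FeatureStore:
    """One write path feeds both stores, so serving and training see the same values."""

    def __init__(self) -> None:
        self.online = OnlineStore()
        self.offline = OfflineStore()

    def write(self, entity_id: str, feature: str, value: Any, ts: float) -> None:
        key = (entity_id, feature)
        self.online.write(key, value, ts)
        self.offline.write(key, value, ts)
```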

Serving

Feature stores typically provide an interface for requesting data from either the offline or online store. At a high level, access to a feature store is divided into online queries and offline queries. Online queries aim to return the latest value of a feature as quickly as possible, either by reading it from a cache or by running the resolvers needed to calculate it. Offline queries typically serve one of three purposes:

- Retrieve historical data that has already been calculated,

- Warm the online store cache (through an offline-online ETL process), or

- Run batch jobs to generate features that don't need to be served through the online store.
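
Retrieving historical data for training usually means a point-in-time ("as of") join. Each labeled event is paired with the feature values that were current at that event's timestamp, so later data never leaks into a training example. Below is a minimal sketch. It assumes a hypothetical history layout that maps `(entity_id, feature)` to a time-sorted list of `(timestamp, value)` pairs.

```python
import bisect
from typing import Any

# Hypothetical offline history: (entity_id, feature) -> [(timestamp, value), ...], sorted by time.
FeatureHistory = dict[tuple[str, str], list[tuple[float, Any]]]

def point_in_time_join(
    labels: list[dict[str, Any]],
    history: FeatureHistory,
    features: list[str],
) -> list[dict[str, Any]]:
    """Attach to each labeled event the feature values as they were at the event's time."""
    rows = []
    for event in labels:
        row = dict(event)
        for feature in features:
            series = history.get((event["entity_id"], feature), [])
            times = [t for t, _ in series]
            # Latest value with timestamp <= event time; None if nothing existed yet.
            i = bisect.bisect_right(times, event["ts"])
            row[feature] = series[i - 1][1] if i else None
        rows.append(row)
    return rows
```

This is the same time-travel lookup that makes audits reproducible. The only difference is that here it runs once per training example.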

There are multiple ways to run offline and online queries, but some of the most common methods include:

- Through clients (implemented in different programming languages),

- By making HTTP requests to a REST API,

- Through scheduled orchestration, which runs queries on a schedule, much like an ETL job.
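
A typical scheduled query is the offline-to-online ETL that warms the online store. Here is a minimal sketch in which `warm_online_store` is a hypothetical job body. A scheduler would invoke it periodically: it copies the newest offline value per key into the online store without clobbering fresher values already written by online serving.

```python
from typing import Any

# Hypothetical offline rows, in any order: (entity_id, feature, timestamp, value).
OfflineRow = tuple[str, str, float, Any]
OnlineTable = dict[tuple[str, str], tuple[float, Any]]  # key -> (timestamp, value)

def warm_online_store(offline_rows: list[OfflineRow], online: OnlineTable) -> int:
    """Copy the newest value per key into the online store; return how many keys changed."""
    latest: OnlineTable = {}
    for entity_id, feature, ts, value in offline_rows:
        key = (entity_id, feature)
        if key not in latest or ts > latest[key][0]:
            latest[key] = (ts, value)

    updated = 0
    for key, (ts, value) in latest.items():
        # Never overwrite a fresher value that online serving already wrote.
        if key not in online or ts > online[key][0]:
            online[key] = (ts, value)
            updated += 1
    return updated
```

Because the job only moves values forward in time, it is safe to rerun. A repeated run over the same rows changes nothing.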

Monitoring

Monitoring is a critical, yet less rigorously defined, part of feature stores. Knowing how your features and resolvers are behaving (or misbehaving) can surface previously invisible or inaccessible information, revealing subtle bugs early.

Because feature stores centralize feature computation, they can provide a comprehensive view into your features, including: the latency of your queries, the relationships between your features, the consistency of a feature’s distribution over time, and the number of times a particular resolver is being run.
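
One common way to quantify whether a feature's distribution is staying consistent is the population stability index (PSI). It bins a baseline sample and a current sample, then sums (actual share − expected share) × ln(actual share / expected share) over the bins. This is one metric among several, chosen here for illustration. The sketch below assumes equal-width bins over the baseline's range and non-empty samples.

```python
import math
from typing import Sequence

def psi(baseline: Sequence[float], current: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population stability index between a baseline sample and a current sample.

    Bin edges come from the baseline's range; eps avoids log(0) for empty bins.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            i = min(max(int((v - lo) / width), 0), bins - 1)
            counts[i] += 1
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift. Any threshold should be tuned per feature rather than taken as given.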

A good feature store lets you define granular metrics and connect them to your existing monitoring and alerting systems. For instance, suppose you have a feature indicating whether a transaction is likely fraudulent (e.g., Transaction.is_fraud). You could configure a monitor that alerts an external service (like PagerDuty) if the percentage of queries marking transactions as fraudulent is suspiciously high or low. This gives immediate visibility into critical decisions made by your ML system that might otherwise be hard to detect.
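
The fraud-rate monitor described above can be sketched as a sliding window with a low and a high bound. The `alert` callable is a placeholder for whatever forwards the message to an incident tool; it is not a real PagerDuty client. The window size and bounds are illustrative.

```python
from collections import deque
from typing import Callable

class RateMonitor:
    """Alert when the share of positive predictions in a sliding window leaves [low, high]."""

    def __init__(self, window: int, low: float, high: float, alert: Callable[[str], None]) -> None:
        self.recent: deque = deque(maxlen=window)
        self.low, self.high = low, high
        self.alert = alert
        self.firing = False  # True while the rate is outside the band

    def observe(self, is_fraud: bool) -> None:
        self.recent.append(is_fraud)
        if len(self.recent) < self.recent.maxlen:
            return  # not enough data yet to judge the rate
        rate = sum(self.recent) / len(self.recent)
        out_of_band = not (self.low <= rate <= self.high)
        if out_of_band and not self.firing:
            # Alert once when the rate leaves the band, not on every query.
            self.alert(f"Transaction.is_fraud rate {rate:.1%} outside [{self.low:.1%}, {self.high:.1%}]")
        self.firing = out_of_band
```

Alerting only on the transition into the out-of-band state keeps a sustained anomaly from paging someone on every query.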

Additionally, some feature stores make it easy to export metrics to common collectors, such as Prometheus, Datadog (through its OpenMetrics integration), and New Relic.
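
To show what such an export can look like, here is a minimal renderer for the Prometheus text exposition format. The metric names are hypothetical, and every sample is emitted as a gauge for simplicity. In practice an official Prometheus client library would normally handle this formatting.

```python
def to_prometheus(samples: list[tuple[str, str, dict[str, str], float]]) -> str:
    """Render (name, help, labels, value) gauge samples as Prometheus text exposition."""

    def esc_label(v: str) -> str:
        # Label values must escape backslash, double quote, and newline.
        return v.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")

    def esc_help(v: str) -> str:
        # HELP text escapes only backslash and newline.
        return v.replace("\\", "\\\\").replace("\n", "\\n")

    # Group samples by metric name: each family must appear as one contiguous block.
    families: dict[str, tuple[str, list[tuple[dict[str, str], float]]]] = {}
    for name, help_text, labels, value in samples:
        families.setdefault(name, (help_text, []))[1].append((labels, value))

    lines: list[str] = []
    for name, (help_text, family) in families.items():
        lines.append(f"# HELP {name} {esc_help(help_text)}")
        lines.append(f"# TYPE {name} gauge")
        for labels, value in family:
            label_str = ",".join(f'{k}="{esc_label(v)}"' for k, v in sorted(labels.items()))
            lines.append(f"{name}{{{label_str}}} {value}" if label_str else f"{name} {value}")
    return "\n".join(lines) + "\n"
```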

Experimenting

A well-implemented feature store lets you experiment and collaborate on features and pipelines. Typically, teams create multiple environments, such as development and testing, for their feature stores. This allows feature transformation code to be tested and evaluated before it is embedded in production pipelines. Extending the same idea of isolated testing, feature stores can also support collaboration through isolated deployments within an environment. Much like branches in version control, these deployments allow concurrent iteration.
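
The branch-style isolation can be illustrated with Python's `collections.ChainMap`. Lookups hit a branch's own overrides first and fall back to the environment's deployed definitions, while writes land only in the branch. This is an in-process analogy under hypothetical names. Real feature stores implement branches at the deployment level.

```python
from collections import ChainMap
from typing import Any, Callable

Resolver = Callable[[dict[str, Any]], Any]

# Definitions currently deployed to an environment (hypothetical examples).
deployed: dict[str, Resolver] = {
    "user.is_high_spender": lambda row: row["total_spend"] > 1000,
    "user.account_age_days": lambda row: row["age_days"],
}

def branch(base: dict[str, Resolver], overrides: dict[str, Resolver]) -> ChainMap:
    """An isolated deployment: the branch's overrides shadow the base definitions.

    ChainMap writes go only to the first mapping, so changes made on the branch
    never mutate the deployed definitions that other branches still see.
    """
    return ChainMap(dict(overrides), base)

# Try a lower spending threshold without touching the deployed definition.
mine = branch(deployed, {"user.is_high_spender": lambda row: row["total_spend"] > 500})
```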

Do you need a feature store?

Not every team needs a feature store right away. For some, existing pipelines and data platforms may be enough. But as machine learning projects grow in scope and complexity, the need for a dedicated system to manage features becomes clear.

When you might not need one (yet)

If your team is only running a handful of offline models and feature definitions live comfortably within existing ETL pipelines, a feature store may be overkill. Similarly, if real-time serving isn’t critical to your use case, you can often get by with a simpler setup.

Signs you’re ready for a feature store

Most ML teams reach a tipping point where managing features without a centralized system starts slowing progress. You should strongly consider adopting a feature store if:

- You need consistency between training and production features to prevent drift.

- Your team spends significant time rewriting Python code for production environments.

- You require low-latency, real-time inference for fraud detection, recommendations, or personalization.

- Multiple teams are rebuilding the same features, slowing iteration velocity.

- You need feature lineage and time travel for compliance, debugging, or audit requirements.

Feature stores were designed to solve these problems, giving ML teams a single source of truth for features and a reliable bridge between prototyping in notebooks and production deployment.

Conclusion

Feature stores have become critical to machine learning platforms at companies of every stage and industry, with ML and data teams of all sizes. If your team wants to ship production-grade machine learning confidently and quickly, a feature store is table stakes.

Chalk is data-source agnostic, offers clients in multiple programming languages, and provides lightning-fast deploys and queries for development. For a best-in-class feature store that developers love to use, explore Chalk’s feature store for yourself by booking a demo.