This quarter, we delivered platform-level improvements that extend Chalk’s ability to support complex, production-grade ML systems. These changes focused on improving how teams define complex features, observe and debug runtime behavior, control infrastructure at scale, and integrate Chalk into their production ML workflows.

Here’s what’s new.

LLM feature support: evaluation and multimodal inputs

LLM workflows are now first-class citizens in Chalk’s feature platform. This quarter, we launched native support for prompt evaluations, enabling teams to define, run, and compare prompts across models and datasets.

import chalk.prompts as P

chalk_client.create_dataset(

input=df,

dataset_name="Churn 2024",

)

chalk_client.prompt_evaluation(

dataset_name="Churn 2024",

evaluators=["exact_match"],

reference_output=User.churned,

prompts=[

P.Prompt(

model="gpt-4.1",

messages=[

P.user_message("Determine the churn prob given login at {{User.last_login}}")

],

temperature=0.2,

),

P.Prompt(

model="claude-sonnet-4-20250514",

messages=[

P.user_message("Determine the churn prob given login at {{User.last_login}}")

],

temperature=0.3,

)

],

)Evals can also run directly from Chalk’s dashboard in addition to a Notebook. Metrics like latency, accuracy, and token usage are available alongside feature outputs. Once a prompt performs reliably, it can be deployed as a production feature, just like any other resolver.

We also expanded the chat resolver, chat.complete(), to support multimodal prompts — combining image and text in a single call. This makes it easier to build GPT‑4o–style LLM workflows directly in Chalk.

@features

class Receipt:

image_url: str

image_response: P.MultimodalPromptResponse = P.completion(

model="gpt-4o",

messages=[

P.message(

"system",

[

{"type": "input_text", "text": "describe this image"},

{"type": "input_image", "image_url": _.image_url},

],

),

],

)Feature flexibility

Production systems need flexible building blocks. We added 50+ new Velox expressions, including support for scikit-learn (e.g. classifiers, regressions, random forest), data structure operations (map_get, proto_serialize, struct_pack), and various encoding functions (sha, spooky_hash). Scikit-learn models can also be hosted with Chalk instead of calling out to hosted model providers like Sagemaker.

import chalk.functions as F

from chalk import features

@features

class User:

id: str

total_spend: float

avg_order_value: float

days_since_last_purchase: int

customer_tenure_days: int

p_churn: float = F.sklearn_logistic_regression(

_.total_spend,

_.avg_order_value,

_.days_since_last_purchase,

_.customer_tenure_days,

model_path=os.path.join(

os.environ.get("TARGET_ROOT", "."),

"churn_lr.joblib",

),

)SQL surface and acceleration visibility

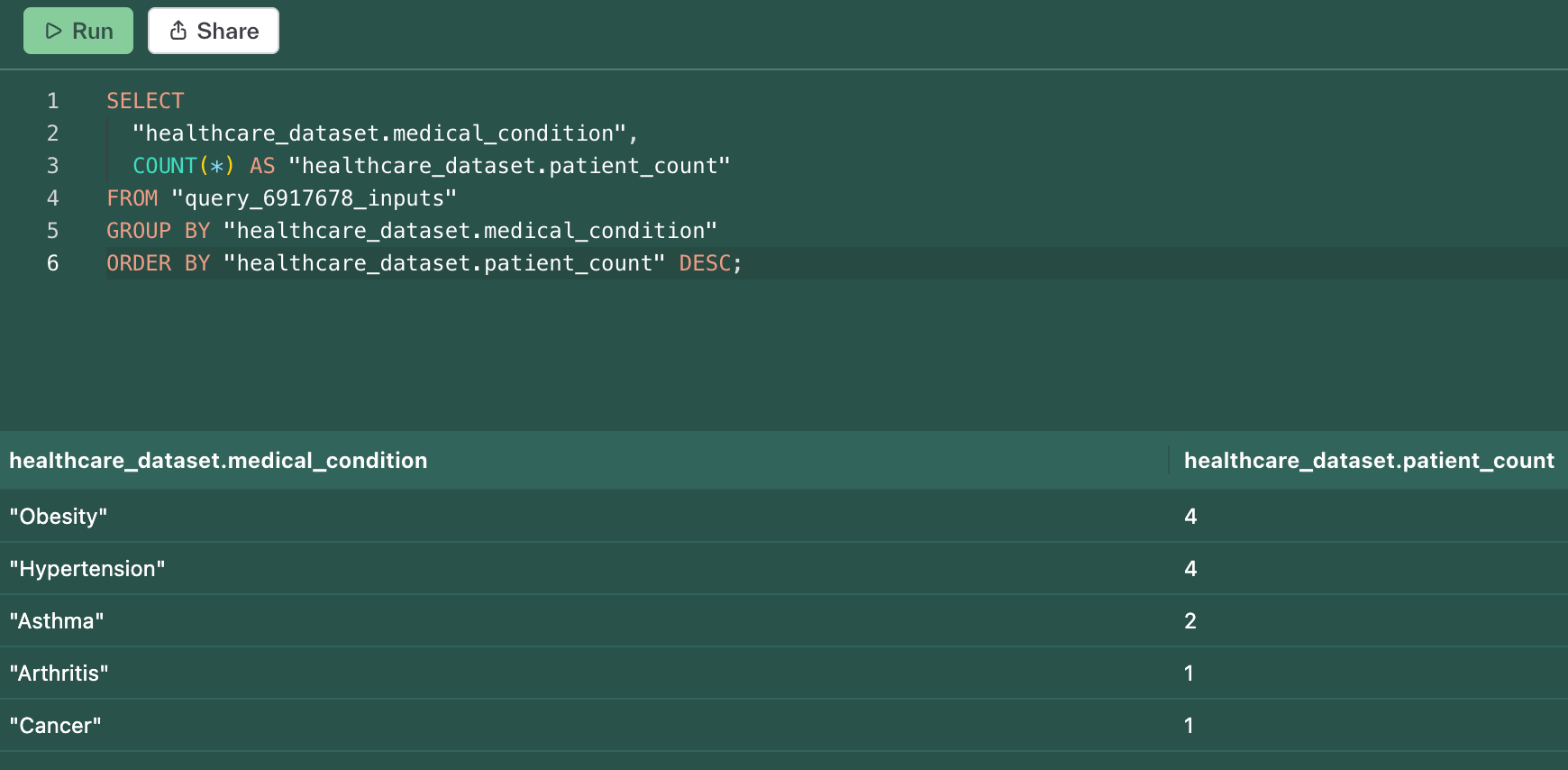

We improved support for SQL-native workflows and gave teams more transparency into performance:

- We added native support for ClickHouse as a SQL data source.

- We added the ability to easily query historical values (Offline Store), underlying data sources, and resolvers that return DataFrames.

- We expanded our acceleration documentation to specify which Python constructs (e.g.,

zipandenumerate) are compiled into Velox expressions.

Observability and runtime inspection

Visibility into how features behave in production is critical.

- We introduced workspace-level audit logs that capture API activity, user/service actions, IPs, and trace IDs.

- We added support for Parquet exports of online queries, including inputs, outputs, query plans, and execution context.

- We built an offline query input explorer to surface SQL and parameter inputs used during dataset builds.

These additions improve auditability for teams running Chalk in regulated or high-risk domains.

Infrastructure controls and SDK updates

Data platform teams need tighter control over how compute is allocated and scaled:

- We introduced per-pod rate limiting to control query throughput and concurrency under load.

- We added node pool and pod-level isolation to enforce resource boundaries and prevent noisy neighbor effects.

- We released a new TypeScript gRPC SDK with full support for

query,bulkQuery, andmultiQuery, matching the HTTP client interface.

These changes reduce noisy neighbor issues and allow Chalk to behave predictably under bursty or latency-sensitive workloads.

Chalk in action: lending, fraud, identity

Real-world usage of Chalk continues to expand — here’s how customers are using it today:

- MoneyLion uses Chalk for real-time fraud detection and personalization, accelerating time-to-production and improving feature freshness. Read more.

- Mission Lane rebuilt its credit underwriting system with Chalk, moving to live features and explainable model inputs. Read more.

- Verisoul uses Chalk for real-time identity risk scoring across heterogeneous data, blending ML into their decisioning system. Watch demo.

Learning resources

To help teams understand where and how Chalk fits into production ML stacks, we published:

- Chalk for AI engineers: Learn how to build LLM and multimodal pipelines using prompt-time feature engineering.

- Chalk’s platform architecture: Understand how Chalk is architected to support high-throughput, low-latency feature computation.

- Evaluating features: Learn how to define, run, and analyze prompt evaluations at scale using Chalk’s native tools—combining dataset-backed metrics, model outputs, and deployment-ready workflows.

- Chalk's YouTube channel: Explore technical walkthroughs, real-world demos, and architecture deep dives from our team and partners.